WHAT IS BIG DATA?

The world today is being ruled by data.

Have you ever wondered how:

Amazon recommends items for you to buy?

Netflix recommends movies to you?

Spotify recommends music to you?

And what is all the hype around big data, data science, and machine learning?

Okay, so before you look into big data, you need to understand what data is.

Data is simply raw facts.

When data is processed and organized to make it useful, it is called information.

Earlier, data was limited and structured.

Today, the whole world is on the Internet!

As a result, we now have a variety of structured, unstructured, and semi-structured data.

Big Data is also data.

It is data of a huge size.

Big Data is a term used to describe data that has a huge volume.

The volume of data has exploded to unimaginable levels.

At the same time, the cost of data storage has steadily decreased.

The challenge of this era is to make sense of this ocean of data.

This is where big data analytics comes into the picture.

THREE PILLARS OF BIG DATA

Big Data Analytics generally involves:

- Gathering data from various sources

- Transforming it so that analysts can use it efficiently

- Finally, delivering data products that are useful to the organization's business

REAL APPLICATIONS OF BIG DATA

Let us see some big data use cases to make our understanding clear.

Netflix Uses Big Data to Improve Customer Experience.

How does Netflix know what you would like?

The secret here is Data Science.

SENTIMENT ANALYSIS

Sentiment analysis provides the substance behind social data.

If a company is launching a new product,

it can find out what customers think of it.

Whether they are happy with it or not, and whether they would like some changes,

can all be discovered using Big Data.
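
To make the idea concrete, here is a minimal sketch of sentiment analysis in Python. It assumes a tiny hand-made word list (`POSITIVE`, `NEGATIVE`) and hypothetical feedback strings; real systems use much larger lexicons or trained models, so treat this only as an illustration of the principle.

```python
import re
from collections import Counter

# Toy word lists: illustrative assumptions, not a real sentiment lexicon.
POSITIVE = {"love", "great", "happy", "good", "excellent"}
NEGATIVE = {"hate", "bad", "slow", "broken", "disappointed"}


def sentiment_label(text: str) -> str:
    """Label one piece of customer feedback as positive, negative, or neutral."""
    words = re.findall(r"[a-z']+", text.lower())
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


def summarize(feedback: list[str]) -> Counter:
    """Count how many comments fall under each label."""
    return Counter(sentiment_label(text) for text in feedback)


# Hypothetical customer comments about a newly launched product.
feedback = [
    "I love the new phone, great battery",
    "The app is slow and the screen is broken",
    "It arrived on Tuesday",
]
print(summarize(feedback))
```

Counting words is crude (it ignores negation and sarcasm), but it shows how raw social text can be turned into a number a company can track.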

CUSTOMER CHURN ANALYSIS

It is the calculation of the rate of attrition in the customer base of any company.

It involves identifying those customers who are most likely to stop using your service or product.

Let us take the example of Call Center Analysis.

Here the data used is call log and transactional data.

Many banks integrate this call center data with their transactional data warehouse to reduce churn, increase sales, set up customer monitoring alerts, and detect fraud.
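
The churn rate and the "likely to leave" idea described above can be sketched in a few lines of Python. The field names (`days_since_last_transaction`, `support_calls`) and the thresholds are hypothetical, chosen only to illustrate combining call log and transactional data; a real bank would train a model on historical records instead of using fixed rules.

```python
def churn_rate(customers_at_start: int, customers_lost: int) -> float:
    """Fraction of the starting customer base that left during the period."""
    if customers_at_start <= 0:
        raise ValueError("customers_at_start must be positive")
    return customers_lost / customers_at_start


def likely_to_churn(customers, max_idle_days=30, min_support_calls=3):
    """Flag customers with many support calls and no recent transactions.

    Field names and thresholds are assumptions made for this sketch.
    """
    return [
        c["id"]
        for c in customers
        if c["days_since_last_transaction"] > max_idle_days
        and c["support_calls"] >= min_support_calls
    ]


# Hypothetical records joining call log data with transactional data.
customers = [
    {"id": "c1", "days_since_last_transaction": 45, "support_calls": 5},
    {"id": "c2", "days_since_last_transaction": 3, "support_calls": 4},
    {"id": "c3", "days_since_last_transaction": 60, "support_calls": 0},
]
print(churn_rate(customers_at_start=200, customers_lost=10))
print(likely_to_churn(customers))
```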

You can see how data is useful everywhere.

Every click you make on the Internet contributes to data.

Big Data is growing exponentially every second.

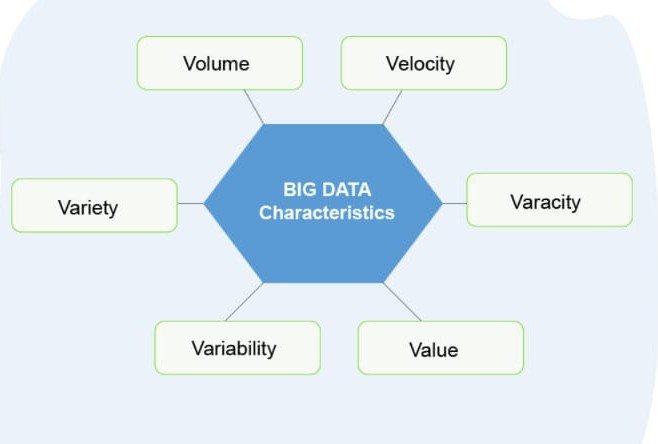

CHARACTERISTICS OF BIG DATA

There are a few terms related to big data.

They help make big data easier to understand.

These are known as the characteristics of Big Data.

Characteristics of Big Data include:

- Volume

- Velocity

- Variety

- Veracity

- Value

These are also known as the 5 V’s.

Volume refers to the amount of data.

Data is growing day by day at a rapid pace.

The amount of data created by people and machines on social media alone is enormous.

Analysts predicted that 40 Zettabytes (40,000 Exabytes) of data would be created by 2020.
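
To get a feel for these units, the conversion can be checked with a few lines of Python. This sketch assumes decimal (SI) prefixes, where 1 Zettabyte is 10^21 bytes and 1 Exabyte is 10^18 bytes; the function names are made up for the illustration.

```python
# Decimal (SI) prefixes are assumed: 1 EB = 10**18 bytes, 1 ZB = 10**21 bytes.
BYTES_PER_EXABYTE = 10**18
BYTES_PER_ZETTABYTE = 10**21


def zettabytes_to_exabytes(zettabytes: int) -> int:
    """Convert Zettabytes to Exabytes (1 ZB = 1,000 EB)."""
    return zettabytes * BYTES_PER_ZETTABYTE // BYTES_PER_EXABYTE


def zettabytes_to_bytes(zettabytes: int) -> int:
    """Convert Zettabytes to plain bytes."""
    return zettabytes * BYTES_PER_ZETTABYTE


print(zettabytes_to_exabytes(40))  # the 40 ZB figure expressed in Exabytes
print(zettabytes_to_bytes(40))     # the same figure expressed in bytes
```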

Velocity is the pace at which various sources generate data every day.

This flow of data is massive and continuous.

Did you know?

Facebook has 1.63 billion Daily Active Users (DAU) on mobile, an increase of 22% year-over-year.

This shows how quickly the number of users is growing.

It also shows how quickly data is being generated every day.

Once you can handle the velocity, you will be able to generate insights.

Variety: because many sources contribute to data, the types of data they generate differ.

It can be structured, semi-structured, or unstructured.

Hence, there is a variety of data being generated every day.

Earlier, we used to get data from Excel and databases.

Data now comes in the form of images, audio, video, and so on, as shown in the picture below.

Veracity refers to data in doubt, that is, the uncertainty of data.

It arises from data inconsistency and incompleteness.
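
As a rough illustration of what incompleteness and inconsistency look like in practice, the sketch below counts records with missing required fields and ids that appear with conflicting values. The record layout (`id`, `email`, `country`) and the function name are assumptions made for this example.

```python
def veracity_report(records, required_fields):
    """Count incomplete records and find ids that appear with conflicting values."""
    incomplete = [
        r for r in records
        if any(r.get(field) in (None, "") for field in required_fields)
    ]
    first_seen = {}
    conflicting_ids = set()
    for r in records:
        key = r.get("id")
        if key is None:
            continue
        if key in first_seen and first_seen[key] != r:
            conflicting_ids.add(key)  # same id, different content
        first_seen.setdefault(key, r)
    return {
        "total": len(records),
        "incomplete": len(incomplete),
        "conflicting_ids": sorted(conflicting_ids),
    }


# Hypothetical customer records collected from several sources.
records = [
    {"id": 1, "email": "a@example.com", "country": "IN"},
    {"id": 2, "email": "", "country": "US"},
    {"id": 1, "email": "a@example.com", "country": "UK"},
    {"id": 3, "email": "c@example.com", "country": None},
]
print(veracity_report(records, required_fields=["email", "country"]))
```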

After discussing Volume, Velocity, Variety, and Veracity,

the next V is Value.

Data, even in vast amounts, is of no use if it does not add value,

or if you cannot turn it into something useful.

By turning it into value, I mean: is it adding to the benefits of the organization?

CHALLENGES IN BIG DATA

Let us now see the challenges that we face while dealing with big data.

The most obvious one is VOLUME, the sheer quantity of data.

However, it is not only the quantity of the data;

the quality of data can be a challenge too.

We collect data from many different sources.

Hence, the data can be very messy, inconsistent, and incomplete.

STORAGE:

- The more data an organization has, the more challenging storing it can get.

- The question that arises here is “Where do we store it?”

- We need a storage system that can easily scale up or down on demand.

DISCOVERING PATTERNS:

- Finding insights in Big Data is like finding a needle in a haystack.

- Analyzing petabytes of data with extremely powerful algorithms to find patterns and insights is very difficult.

LACK OF TALENT:

- Many organizations are running large Big Data projects.

- However, building a skilled team of developers, data scientists, and analysts who also have sufficient domain knowledge is still a challenge.

BIG DATA SECURITY:

- Since the data is huge, keeping it secure is another challenge.

- It includes user authentication, restricting access based on the user, recording data access histories, proper use of data encryption, and so on.

Every cloud has a silver lining. Big data challenges, although huge, can be addressed. Hadoop comes as a savior here.

WHY BIG DATA ANALYTICS?

We create about 2.5 quintillion bytes of data every day!

Every click we make online contributes to data.

Since we have an enormous amount of data,

it would be a sheer injustice to let it sit idle.

Hence, many organizations analyze this data to benefit from it.

This very analysis is known as Big Data Analytics.

The first reason for analyzing the data is:

Making Organizations Smarter and More Efficient

Many organizations use data to solve their problems.

They analyze historical patterns and then map them to related events.

A Big Data and analytics strategy helps them identify the cause and the solution.

Optimize Business Operations by Analyzing Customer Behavior

Most organizations use customer behavior analytics to improve customer satisfaction.

The best example of this is Amazon.

Amazon is one of the best and most widely used e-commerce websites.

It uses customer click-stream data and historical purchase data to provide customers with customized results on customized web pages.
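
One simple way to turn purchase history into recommendations is to count which items are bought together. The sketch below is only an illustration of that idea with hypothetical order data; it is not Amazon's actual method, which is not described here.

```python
from collections import Counter
from itertools import combinations


def co_purchase_counts(orders):
    """Count how often each pair of items appears in the same order."""
    counts = Counter()
    for order in orders:
        for a, b in combinations(sorted(set(order)), 2):
            counts[(a, b)] += 1
    return counts


def recommend(item, orders, top_n=3):
    """Suggest the items most often bought together with `item`."""
    scores = Counter()
    for (a, b), n in co_purchase_counts(orders).items():
        if a == item:
            scores[b] += n
        elif b == item:
            scores[a] += n
    return [other for other, _ in scores.most_common(top_n)]


# Hypothetical historical purchase data, one list per order.
orders = [
    ["phone", "case", "charger"],
    ["phone", "case"],
    ["laptop", "mouse"],
    ["phone", "charger"],
    ["phone", "case"],
]
print(recommend("phone", orders))
```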

Data helps them improve the customer experience

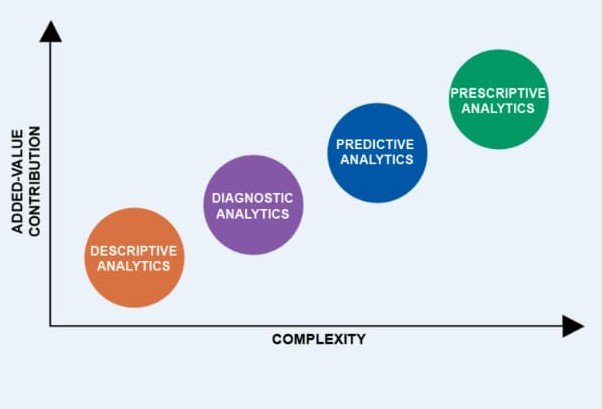

TYPES OF BIG DATA ANALYTICS

Big Data Analytics

Big data analytics examines large and varied types of data.

It uncovers hidden patterns, correlations, and other insights.

Big Data Analytics is primarily used in organizations to facilitate their growth and development.

This mainly involves applying various data mining algorithms to a given data set.

It then helps them make better decisions.

Types of Big Data Analytics

There are four types:

- Descriptive Analytics

- Predictive Analytics

- Prescriptive Analytics

- Diagnostic Analytics

Descriptive Analytics

It uses data aggregation and data mining to provide insight into the past and answer:

“What has happened?”

Descriptive analytics does exactly what the name implies: it “describes.”

It summarizes raw data and makes it interpretable by humans.
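
A minimal sketch of descriptive analytics, using only Python's standard `statistics` module, might look like this. The daily sales figures are hypothetical.

```python
from statistics import mean, median


def describe(values):
    """Summarize raw numbers into a few human-readable figures."""
    return {
        "count": len(values),
        "min": min(values),
        "max": max(values),
        "mean": mean(values),
        "median": median(values),
    }


# Hypothetical daily sales figures for one week of trading.
daily_sales = [120, 80, 100, 140, 60]
print(describe(daily_sales))
```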

Predictive Analytics

It uses statistical models and forecasting techniques to understand the future and answer:

“What could happen?”

Predictive analytics gives organizations actionable insights based on data.

It provides estimates about the likelihood of a future outcome.
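
As one very simple example of a forecasting technique, the sketch below fits a straight line to past values with ordinary least squares and extrapolates one step ahead. The monthly figures are hypothetical, and a linear trend is just one of many possible models.

```python
def fit_line(xs, ys):
    """Ordinary least squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(x, slope, intercept):
    """Estimate y for a new x using the fitted line."""
    return slope * x + intercept


# Hypothetical sales for months 1 to 4; forecast month 5.
months = [1, 2, 3, 4]
sales = [10, 20, 30, 40]
slope, intercept = fit_line(months, sales)
print(predict(5, slope, intercept))
```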

Prescriptive Analytics

It uses optimization and simulation algorithms to advise on possible outcomes and answer:

“What should we do?”

It allows users to “prescribe” a number of possible actions and guides them towards a solution.

In a nutshell, this type of analytics is all about giving advice.
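
A toy version of this idea is to simulate the outcome of each candidate action and recommend the best one. The demand model below (`100 - 2 * price`) is a made-up assumption for illustration only.

```python
def expected_revenue(price: float) -> float:
    """Hypothetical demand model: every extra unit of price loses two buyers."""
    demand = max(0.0, 100 - 2 * price)
    return price * demand


def prescribe(candidate_prices):
    """Simulate every candidate action and recommend the one with the best outcome."""
    return max(candidate_prices, key=expected_revenue)


candidate_prices = [10, 20, 25, 30, 40]
best = prescribe(candidate_prices)
print(best, expected_revenue(best))
```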

Diagnostic Analytics

It is used to determine why something happened in the past:

“Why did it happen?”

It is characterized by techniques such as drill-down, data discovery, data mining, and correlations.

Diagnostic analytics takes a deeper look at data to understand the root causes of events.
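
Drill-down can be sketched as breaking a total into segments and comparing two periods to see which segment caused a change. The `region` and `sales` fields and the figures below are hypothetical.

```python
from collections import defaultdict


def drill_down(rows, dimension, measure):
    """Break a total down by one dimension, for example sales by region."""
    totals = defaultdict(float)
    for row in rows:
        totals[row[dimension]] += row[measure]
    return dict(totals)


def change_by(rows_before, rows_after, dimension, measure):
    """Show how much each segment changed between two periods."""
    before = drill_down(rows_before, dimension, measure)
    after = drill_down(rows_after, dimension, measure)
    return {k: after.get(k, 0.0) - before.get(k, 0.0) for k in before.keys() | after.keys()}


# Hypothetical sales by region for two consecutive months.
last_month = [{"region": "North", "sales": 100}, {"region": "South", "sales": 100}]
this_month = [{"region": "North", "sales": 100}, {"region": "South", "sales": 60}]

change = change_by(last_month, this_month, "region", "sales")
print(min(change, key=change.get))  # the region with the largest drop
```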

ROLES OF KEY STAKEHOLDERS

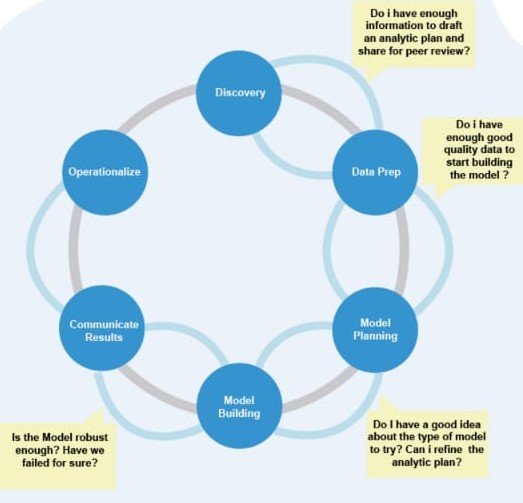

The Data Analytics Lifecycle is explicitly designed for Big Data problems.

The lifecycle has six phases.

Before we learn about the lifecycle,

let’s learn about the roles needed to implement it successfully.

Business User

- Someone who understands the domain area.

- Usually benefits from the results.

- This person can consult and advise the project team on the context of the project, the value of the results, and how the outputs will be operationalized.

Project Sponsor

- Responsible for the genesis of the project.

- Provides the impetus and requirements for the project and defines the core business problem.

- Generally provides the funding and gauges the degree of value from the final outputs of the working team.

- This person sets the priorities for the project and clarifies the desired outputs.

Project Manager

- Ensures that key milestones and objectives are met on time.

- Also ensures that the expected quality is maintained.

Business Intelligence Analyst

- Provides business domain expertise based on a deep understanding of the data, key performance indicators (KPIs), key metrics, and business intelligence from a reporting perspective.

- Business Intelligence Analysts generally create dashboards and reports and have knowledge of the data feeds and sources.

Database Administrator (DBA)

- Provisions and configures the database environment to support the analytics needs of the working team.

- These responsibilities may include providing access to key databases or tables.

- They also include ensuring that the appropriate security levels are in place for the data repositories.

Data Engineer

- Uses deep technical skills to assist with tuning SQL queries for data management and data extraction.

- The data engineer works closely with the data scientist to help shape data correctly for analysis.

Data Scientist

- Provides subject matter expertise for analytical techniques, data modeling, and applying valid analytical techniques to given business problems.

- Ensures overall analytics objectives are met.

- Designs and executes analytical methods and approaches with the data available to the project.

PHASES OF LIFECYCLE

The Data Analytics Lifecycle defines analytics process best practices. It is designed specifically for big data problems.

The lifecycle has six phases, starting with discovery and ending with project completion.

Phase 1—Discovery

In Phase 1, the team learns the business domain, including relevant history.

For example,

it can learn whether the organization or business unit has attempted similar projects in the past.

The team assesses the resources available to support the project in terms of people, technology, time, and data.

Key activities in this phase include framing the business problem as an analytics challenge.

Phase 2—Data preparation

Phase 2 requires the presence of an analytic sandbox.

In it, the team can work with data and perform analytics for the duration of the project.

The team needs to execute extract, load, and transform (ELT) to get data into the sandbox.

The team also needs to familiarize itself with the data thoroughly.

They need to take steps to condition the data.
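
The ELT steps above can be sketched with Python's built-in `sqlite3` module, using an in-memory database as a stand-in for the analytic sandbox. The table names, column names, and sample rows are assumptions made for this example.

```python
import sqlite3


def run_elt(raw_rows):
    """Load raw rows into a sandbox as-is, then transform them inside it."""
    conn = sqlite3.connect(":memory:")  # in-memory database stands in for the sandbox
    # Load: store the extracted rows untouched, with amounts still as text.
    conn.execute("CREATE TABLE raw_sales (region TEXT, amount TEXT)")
    conn.executemany("INSERT INTO raw_sales VALUES (?, ?)", raw_rows)
    # Transform: condition the data by normalizing names, casting amounts,
    # and dropping rows whose amount is missing or empty.
    conn.execute(
        """
        CREATE TABLE clean_sales AS
        SELECT UPPER(TRIM(region)) AS region, CAST(amount AS REAL) AS amount
        FROM raw_sales
        WHERE amount IS NOT NULL AND TRIM(amount) <> ''
        """
    )
    return conn


# Extract: hypothetical rows as they might arrive from a source system.
raw_rows = [(" north ", "10.5"), ("South", ""), ("NORTH", "4.5"), ("south", None)]
conn = run_elt(raw_rows)
query = "SELECT region, SUM(amount) FROM clean_sales GROUP BY region ORDER BY region"
print(conn.execute(query).fetchall())
```

Keeping the raw table next to the cleaned one lets the team re-run or change the conditioning step without extracting the data again.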

Phase 3—Model planning

The team determines the methods, techniques, and workflow

it intends to follow for the subsequent model-building phase.

The team explores the data to learn about the relationships between variables and then selects the key variables and the most suitable models.

Phase 4—Model building

In Phase 4, the team builds and runs the models selected during model planning.

Phase 5—Communicate results

In Phase 5, the team, in collaboration with major stakeholders, determines whether the results of the project are a success or a failure based on the criteria developed in Phase 1.

The team should identify key findings, quantify the business value, and develop a narrative to summarize and convey findings to stakeholders.

Phase 6—Operationalize

In Phase 6, the team delivers final reports, briefings, code, and technical documents.

In addition, the team may run a pilot project to implement the models in a production environment.

Operationalize is the final phase of the Data Analytics Lifecycle

BIG DATA SOURCES

One of the biggest challenges is finding data sources that are appropriate for analytics processes.

As the name implies, Big Data is large, but the size is not the only concern.

There are several other considerations when deciding how to locate and parse Big Data sets.

Considerations should include the following:

- Structure of the data (structured, unstructured, semi-structured, table-based, proprietary)

- Source of the data (internal, external, private, public)

- Value of the data (generic, unique, specialized)

- Quality of the data (verified, static, streaming)

- Storage of the data (remotely accessed, shared, dedicated platforms, portable)

- Relationship of the data (superset, subset, correlated)

Finding data for Big Data analytics is a mix of three skills:

- part science

- part investigative work

- part assumption

Some of the most obvious sources of data are electronic transactions, website logs, and sensor information.

Any data the organization gathers while doing business is included.

The idea is to locate as many data sources as possible and bring the data into an analytics platform.

Additional data can be gathered by using network taps and data replication clients.

Ideally, the more data that can be captured, the more data there is to work with.

Finding the internal data is the easy part of Big Data.

It gets more complicated once data considered unrelated, external, or unstructured is brought into the equation.

With that in mind, the big question with Big Data now is,

“Where do I get the data from?”

Every project usually starts with a goal.

Big Data analytics should be no different.

However, defining the goal can be a complicated process,

especially when the goal is vague and amounts to little more than something like “using the data better.”

It is imperative to define the goal before hunting for data sources.

Take, for instance, a retail organization.

The goal of Big Data analytics might be to increase sales, a task that spans several business ideologies and departments, including marketing, pricing, inventory, advertising, and customer relations.

Once there is a goal in mind, the next step is to define the objectives and the exact means to reach the goal.

STORAGE DILEMMA

We already know that storing Big Data is challenging.

To handle this, we need to work on some fundamental techniques.

This means understanding how storage design elements interact with Big Data demands.

Capacity

Big Data can mean petabytes of data.

Therefore, big data storage systems must be able to scale quickly and easily to meet the growth of data collections.

These storage systems need to add capacity in modules or arrays, transparently to users and without taking systems down.

Security

Many types of data carry security standards that are driven by compliance laws and regulations.

The data may be financial, medical, or government intelligence, or part of an analytics set,

yet it still needs to be protected.

Latency

In many cases, Big Data has a real-time component,

especially in use cases involving Web transactions or financial transactions.

Latency produces “stale” data.

Access

As organizations gain a better understanding of the potential of Big Data analytics,

the need to compare different data sets increases, and with it, more people are brought into the data-sharing loop.

Persistence

Big Data applications often involve regulatory compliance requirements,

which dictate that data must be saved for years or decades.

An example is medical information, which is often saved for the life of the patient.

However, Big Data users are often saving data longer because it is part of a historical record or is used for time-based analysis.

Cost

Big Data can be expensive.

Given the scale at which many organizations are operating their Big Data environments, cost containment is imperative.

STILL NOT GETTING THE DIFFERENCE BETWEEN BIG DATA, DATA SCIENCE, AND DATA ANALYSIS?

No problem. Here I am going to define all these terms again!

What Is Data Science?

Data Science is a blend of various tools, algorithms, and machine learning principles to discover hidden patterns from the raw data.

Data Science is a broad term.

It comprises statistics, data visualization, big data, machine learning, and much more.

Data science is a big umbrella covering each and every aspect of data processing.

Data Science is exploratory and useful in getting to know the data.

Automated methods are used to analyze massive amounts of data.

You can say, data science is the father of all (data analytics, data analysis, and data mining).

What is Data Analytics?

Data analytics is a process in which large data sets (Big Data)… are collected, organized, and analyzed to discover useful patterns.

These patterns provide useful information that can help a company make future decisions.

Data analytics is made up of data analysis techniques.

These techniques include algorithms and data mining methods.

What is Big Data?

Big Data refers to large amounts of data.

It pours in from various data sources and comes in different formats.

It is something that can be analyzed for insights, which can lead to better decisions and strategic business moves.

WHAT IS A DATA SCIENTIST?

Data Scientists perform an exploratory analysis to discover insights from the data.

They also use various advanced machine learning algorithms to identify a particular event’s occurrence in the future.

This involves identifying hidden patterns, unknown correlations, market trends, and other useful business information.

The responsibilities of a big data professional revolve around dealing with a huge amount of heterogeneous data,

which is gathered from various sources and comes in at a high velocity.

Big data professionals describe the structure and behavior of a big data solution.

The solution can be delivered using big data technologies such as Hadoop, Spark, Kafka, etc., based on requirements.

Data analysts translate numbers into plain English.

Every business collects data, like sales figures, market research, logistics, or transportation costs.

A data analyst’s job is to take that data and help companies make better business decisions.

How data can affect IT

Data plays a huge role in understanding valuable insights.

From every interaction with technology, regardless of whether it is active or passive, we are creating new data that can describe us.

If analyzed correctly, these data points can explain a lot about our behavior, personalities, and life events.

The concept of big data and its importance has been around for years; however, only recently has technology enabled the speed and efficiency at which large sets of data can be analyzed.

Big data will change how even the smallest companies do business.

Data collection and interpretation are becoming more accessible.